From Theory to Practical Implementation

Genetic Algorithm — a Nature Inspired Algorithm

Science and technology often crossover in the most unexpected and innovative way. Charles Darwin likely never imagined that his discovery of natural selection would inspire one of the most widely adopted optimization algorithms in the modern world.

When he explained in his 1859 book “On the Origin of Species” how living things evolve over time through the differential survival and reproduction of individuals with favourable traits, he unknowingly laid the groundwork for what we now call Genetic Algorithm (GA).

Genetic Algorithm has become a powerful tool in design optimization, applicable to both single and Multi-Objective Optimization (MOO). To understand why, let’s explore how Genetic Algorithm differs from other optimization methods, and why these differences make them particularly well-suited for certain design challenges.

Want to learn more about Multi-Objective Optimization? Check out our previous article here!

Using Genetic Algorithm in Design Optimization

Two of the most distinct features of Genetic Algorithm are that they are heuristic-based and gradient-free.

While many engineering designs, like wing shapes, are guided by well-defined physical laws, numerous design challenges cannot be reduced to a simple physical or mathematical equation. Heuristics, relying on common-sense reasoning or rules of thumb, provide a pragmatic approach to tackling these complex problems.

Being gradient-free, Genetic Algorithm does not require derivative information. This makes it particularly suitable for scenarios where gradients are either computationally expensive or simply unavailable. Moreover, gradient-free algorithms are capable of handling discrete design variables directly, making them an ideal candidate for design problems such as combinatorial optimization.

The Theory Behind Genetic Algorithm

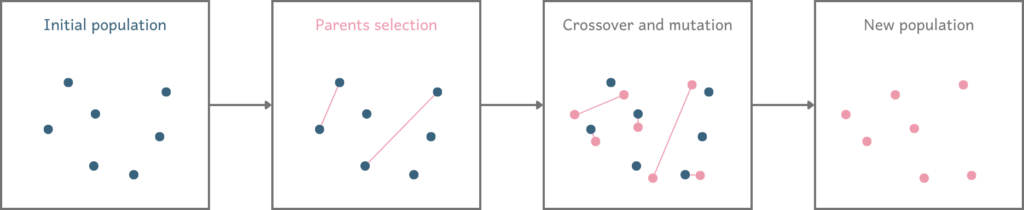

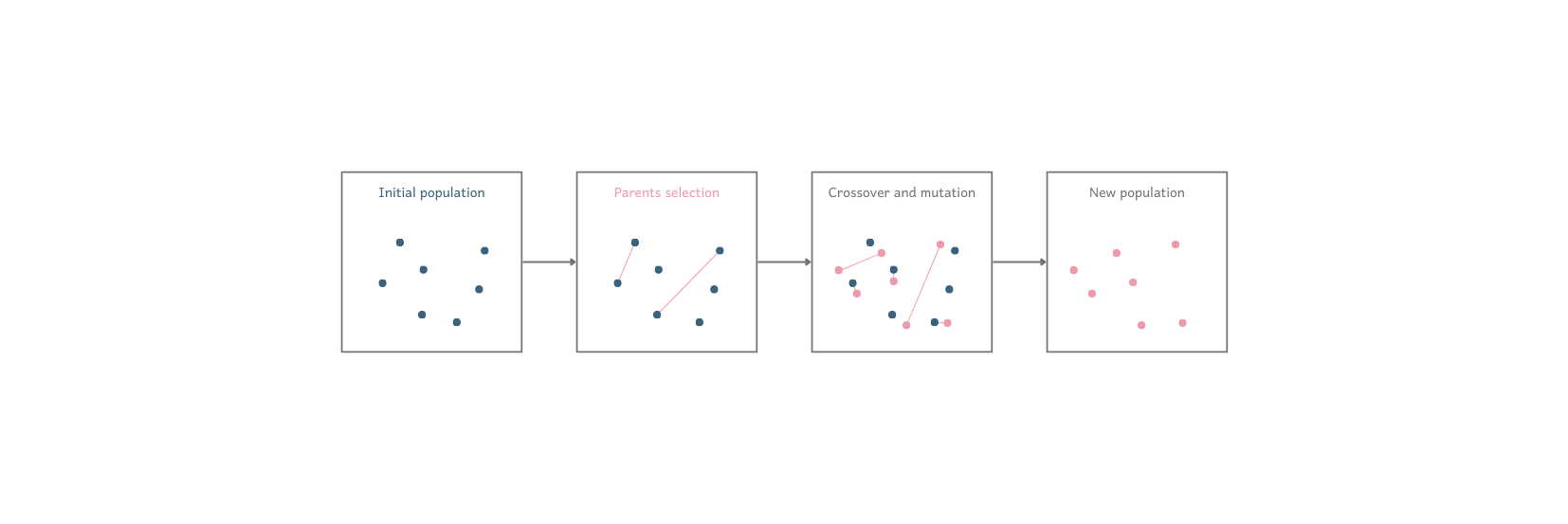

Much like the principles of biological evolution, Genetic Algorithm iteratively improves the solution to an optimization problem. The process begins with an initial population of potential solutions, from which the most promising candidates, based on their fitness, are selected as parents. New generations of candidates are then created through recombination of parent pairs, occasional random mutations to maintain diversity, and elitism where the fittest members are retained to prevent any regression in the quality of the population.

In this section, we’ll dive into the foundational theory behind Genetic Algorithm and break down its core concepts. You’ll find these ideas surprisingly relatable and easy to grasp due to their strong parallels with biological evolution. This solid understanding will better prepare us for the next part, where we apply Genetic Algorithm to a design optimization problem.

Encoding

Before we can apply Genetic Algorithm to our optimization problem, we must first define how the design variables are represented. This process, known as encoding, involves translating variables into a format that algorithms can more effectively manipulate.

Continuous variables can be encoded using binary or real encoding. Real encoding has the advantage of representing numbers with machine-level precision, unlike binary encoding, which is limited by the number of bits.

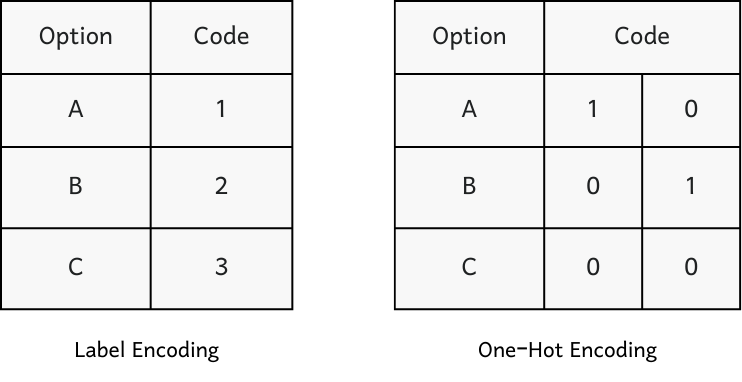

For discrete variables, which are often more commonly encountered in design optimization problems that involve Genetic Algorithm, categorical encoders like Label Encoder or One-Hot Encoder are particularly effective. Label Encoder assigns a unique integer to each option, while One-Hot Encoder transforms these options into distinct binary vectors. Label Encoders are not only intuitive and easy to implement, but also offer lower dimensionality compared to One-Hot Encoders.

Initialisation

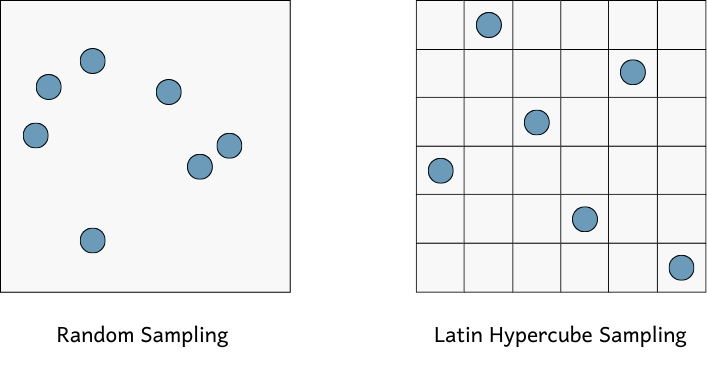

There are several methods to initial a population, with random sampling being the most common. In random sampling, candidate solutions are generated from random combinations of the design variables. While this approach is straightforward and scales well, it often results in samples that are clustered in parts of the design space, limiting the efficiency of a thorough exploration.

To mitigate this issue, Latin Hypercube sampling can be employed. This technique divides the design space into a grid and ensures that each sample occupies a unique cell in every row and column. As a result, the initial population is more uniformly distributed, enhancing the effectiveness of the search process.

Another common technique is to initialise a population with known solutions, typically derived from prior analyses or assessments where they were identified as promising. Incorporating these solutions into the initial population can significantly speed up the optimization process.

Fitness Function

In Genetic Algorithm, the fitness function plays a similar role to the objective function in typical numerical optimization. It offers a measure that guides the algorithm towards increasingly better solutions. Unlike an objective function, which is often grounded in the physical principles underlying the design problem, the fitness function is a heuristic measure. It isn’t based on scientific laws but rather on practical reasoning or past experiences, evaluating how closely a solution approaches the idea outcome.

In single objective optimization, only one fitness function is used. In contrast, Multi-Objective Optimization can involve multiple fitness functions, which may be combined into a single function using a weighted sum approach. By keeping fitness functions separate, a range of nondominated optimal solutions can be identified, rather than restricting the balance between objectives to fixed weights.

Elitism

As candidate solutions evolve through crossover or mutation, there’s a risk that the best solutions might be lost, leading to a decline in overall quality. To prevent this and enhance convergence, elitism is often used. In this approach, a small percentage of the population with the highest fitness scores is preserved and automatically carried over to the next generation. The size of this elite group is a tuneable parameter, typically set to a low value.

Selection

Selection involves choosing parents from the population to form a mating pool for reproduction. While selecting candidates with high fitness may sound ideal, preserving diversity is equally important to ensure a thorough exploration of the design space.

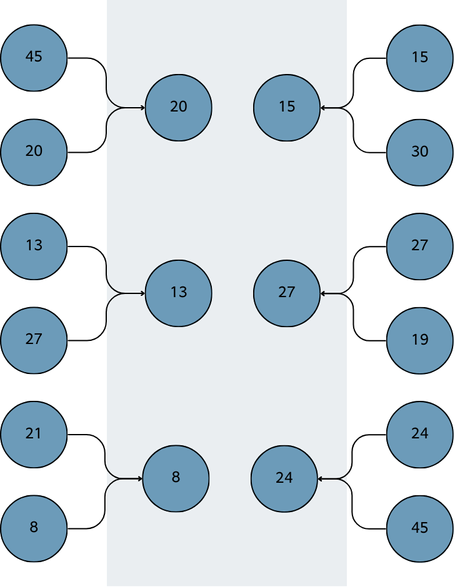

One popular selection method is tournament selection. This approach involves randomly pairing candidates to compete, with the winner determined by their fitness and a biasing probability that gives weaker candidates a chance to be selected.

In Multi-Objective Optimization, tournament selection is adapted to handle multiple fitness functions by introducing a ranking system based on dominance depth. If a candidate is nondominated, meaning no other solution outperforms it in all objectives, it is assigned a dominance depth of one. These rank-1 candidates are then temporarily removed from consideration, allowing the next set of nondominated solutions to be identified and ranked as depth-2, and this process continues for subsequent ranks.

Parents are selected not only based on these ranks but also on their crowding distance, a measure of how distinct each candidate is from others. The ensures that the parents are spread out across the design space, preserving diversity within the population.

Crossover

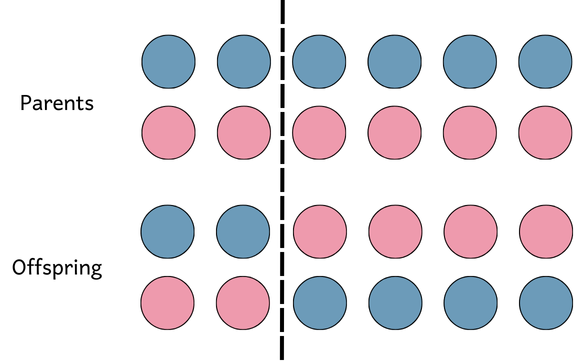

In Genetic Algorithm, offspring are generated through a process known as crossover, which mirrors the mixing of traits in biological reproduction. For continuous variables, common crossover approaches include linear combinations or Simulated Binary Crossover, where new solutions are created by blending the values from parent solutions.

For discrete design variables, k-point crossover is widely used. In this method, a pair of parents produces two offspring by exchanging segments of their genes at specified crossover points. Depending on the number of crossover points, this can range from single-point to two-point or even multi-point crossover, allowing for various combinations of parental traits to be passed on to the next generation.

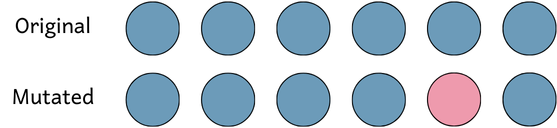

Mutation

Although reproduction and crossover are essential for creating new candidate solutions, they can sometimes lead to the loss of beneficial traits. To counter this and further introduce diversity within the population, mutation is applied.

Different types of mutation are suited for different variable types. For continuous variables, polynomial and Gaussian mutations introduce small, random adjustments to refine solutions. For binary-encoded variables, bit flip mutation randomly changes bits within the solution. In permutation-based design, where order matters, inversion mutation reverses the order of a segment of the solution. Finally, for discrete or categorical variables, choice random mutation replaces one or more genes with another randomly selected value.

Constraints Handling

In Genetic Algorithm, constraints are handled differently compared to traditional mathematical optimization methods. One approach is to prioritise feasible solutions when generating new candidates, ensuring that only those meeting all constraints are considered for reproduction. This method is straightforward and works well with algorithms that rank or sort candidates based on their fitness.

Alternatively, constraints can be managed using a penalty function, which modifies the fitness of infeasible solutions by imposing a penalty based on the degree of constraint violation. This approach transforms the constrained optimization problem into an unconstrained one, allowing Genetic Algorithm to operate as usual while gradually guiding the population towards feasible regions of the solution space.

Stopping Criteria

Unlike traditional mathematical optimization methods, Genetic Algorithm do not rely on strict convergence criteria. Instead, they use more flexible stopping criteria to determine when the optimization process should end.

Simple criteria include setting a maximum number of generations or a time limit, reflecting the available computational budget. More advanced approaches involve setting a tolerance level in either the design or solution space. The optimization process then terminates once the improvement in the solution falls below this predefined threshold, ensuring the solution meets the required precision.

Practical Implementation of Genetic Algorithm

It’s truly fascinating how inspiration from nature can be transformed into a widely adopted optimization algorithm. As we’ve seen, Genetic Algorithm draws directly from the principles of evolution and natural selection, making it intuitive and broadly applicable. These foundational theories are crucial for the practical implementation we’ll explore next.

Combinatorial optimization, often found in system design, involves selecting the optimal combination of objects from a finite set. While manually comparing solutions using a spreadsheet might work for small problems, it quickly becomes intractable as the complexity increases. For example, choosing ten components each with ten options results in 10¹? possible combinations. In such cases, Genetic Algorithm offers an efficient alternative for finding the optimal solution.

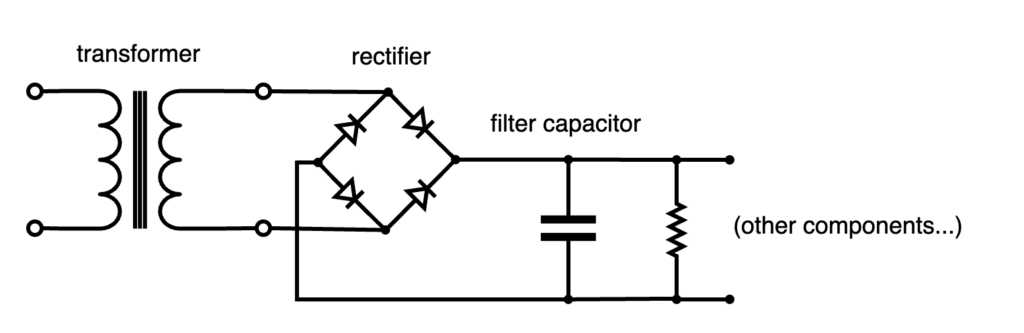

Design Optimization of the Power Supply Unit

Imagine we’re designing a power supply unit, an essential component in many electronics system. This unit comprises eight components, such as a rectifier, transformer, power switch, and input and output filter capacitors.

For each component, there are three to seven different suppliers to choose from, resulting in a total of around 10? possible combinations. Each suppler offers components with varying levels of reliability and cost. The overall reliability of the unit can be simplified as the product of the individual reliabilities, while the total cost is the sum of the individual costs.

As you might expect, lower-cost options often come with reduced reliability. This design problem can be addressed either as a single objective optimization, where cost is minimise or reliability is maximised, or as a Multi-Objective Optimization problem, where the goal is to find the set of optimal solutions that balance both cost and reliability.

Solution Using Pymoo

In this section, I’ll demonstrate how to use the open source Python package pymoo to implement a Genetic Algorithm for solving the power supply unit design optimization problem.

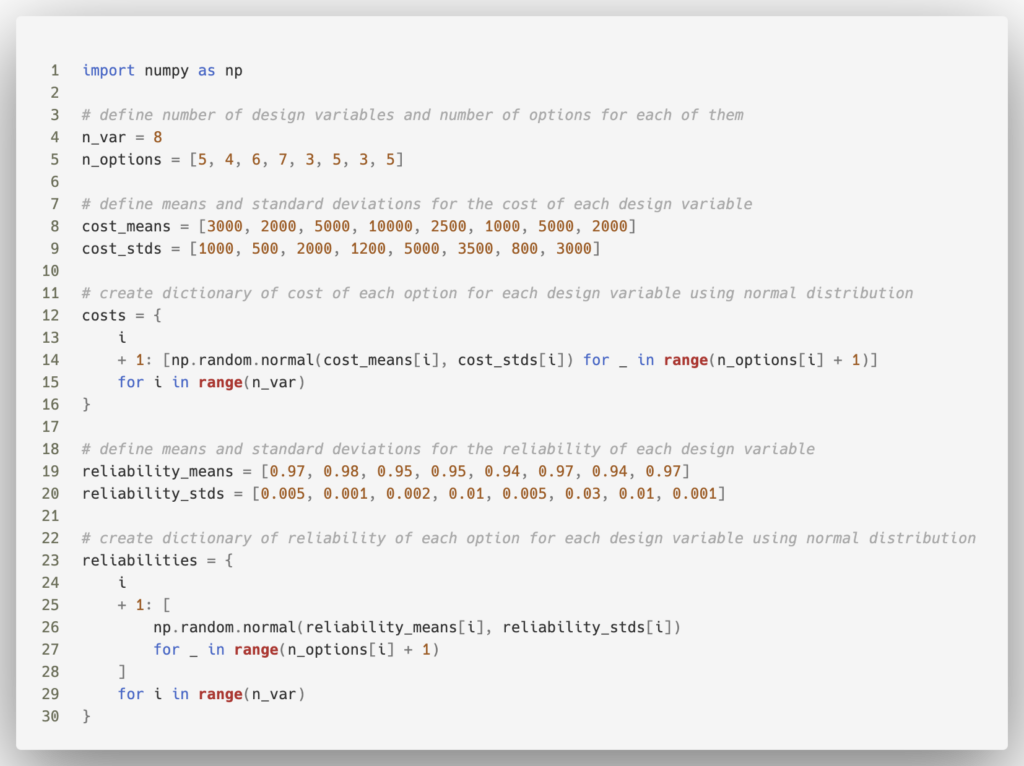

First, we’ll create some dummy data for the cost and reliability of each component option. Here, I’ve used a Normal distribution to randomly generate these values based on predefined means and standard deviations.

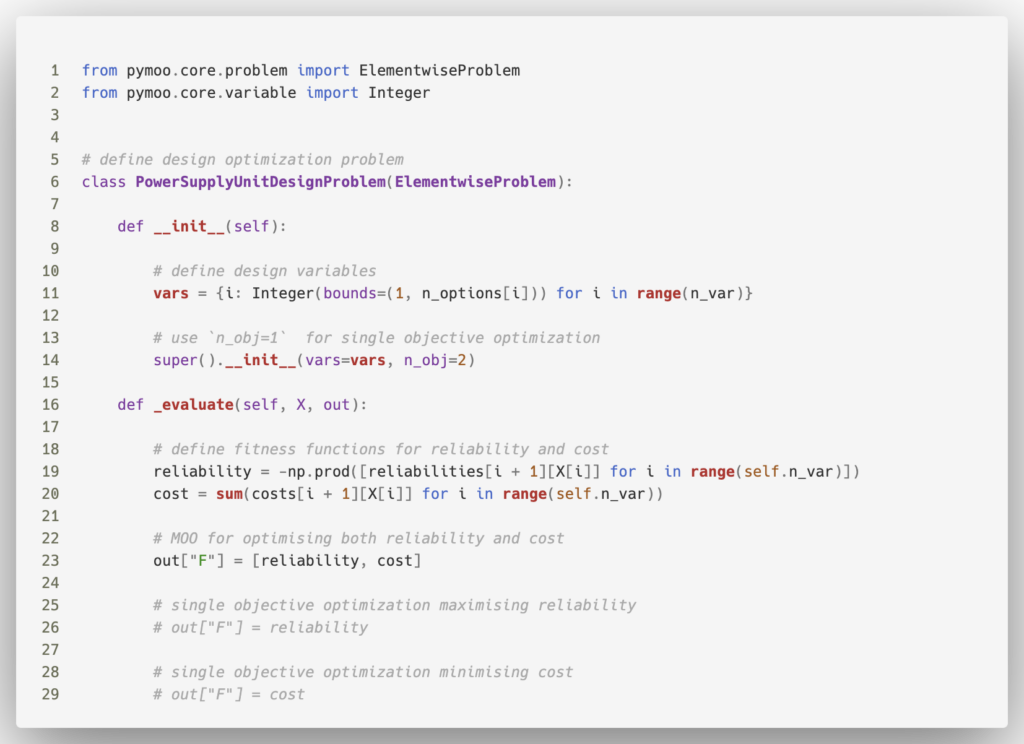

Next, we’ll import pymoo and define our optimization problem. In the __init__ method, I’ve set up eight design variables, each representing a component with a fixed number of options encoded as integers. The _evaluate method is where the fitness function is defined, including either reliability or cost for single objective optimization and both for Multi-Objective Optimization.

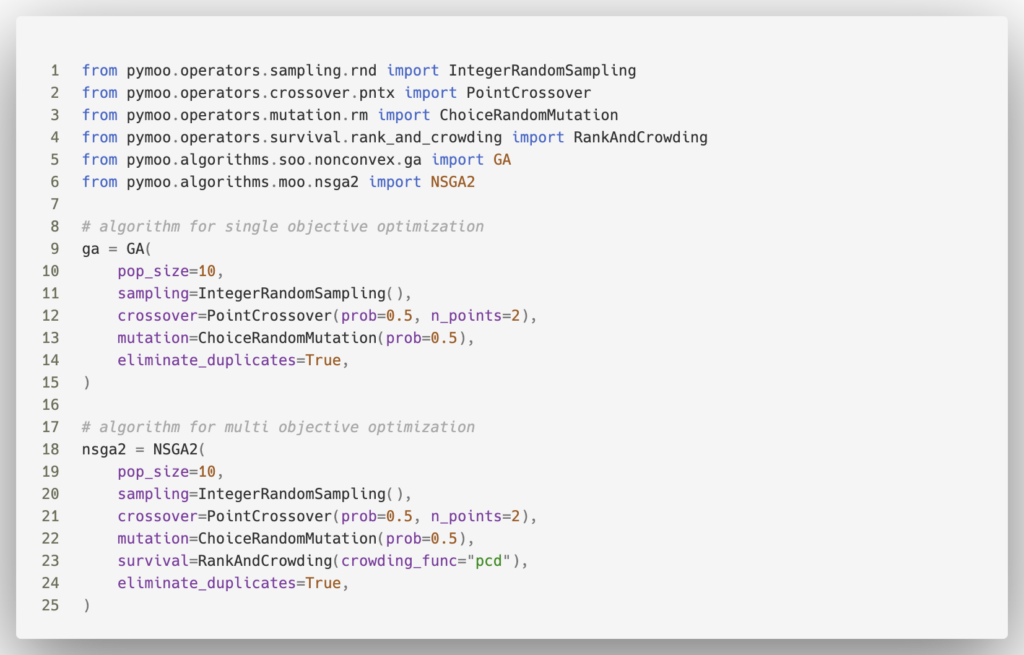

After that, we’ll instantiate the algorithm and configure the parameters for each operation. Since our design variables are integers, we’ll use IntergerRandomSampling for initialisation, PointCrossover for reproduction, and ChoiceRandomMutation for introducing diversity into the population.

For a single objective optimization, we can use the standard Genetic Algorithm. For Multi-Objective Optimization, we’ll use the NSGA2 algorithm which incorporates ranking and crowding mechanisms during the selection stage.

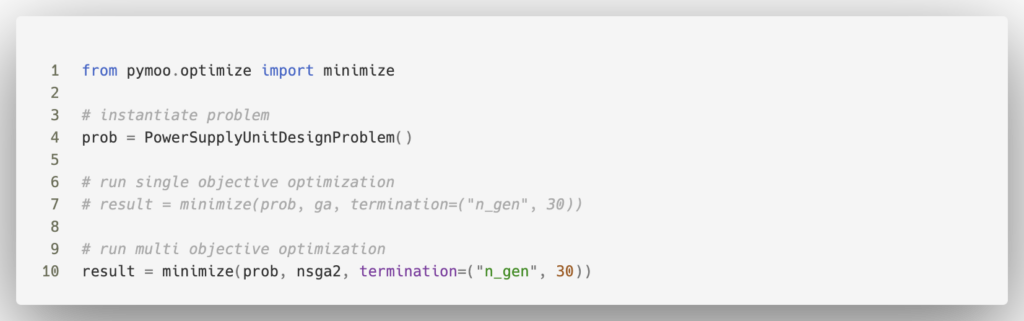

Finally, we’ll run the algorithm using the minimize function, with the stopping criterion set to 30 generations.

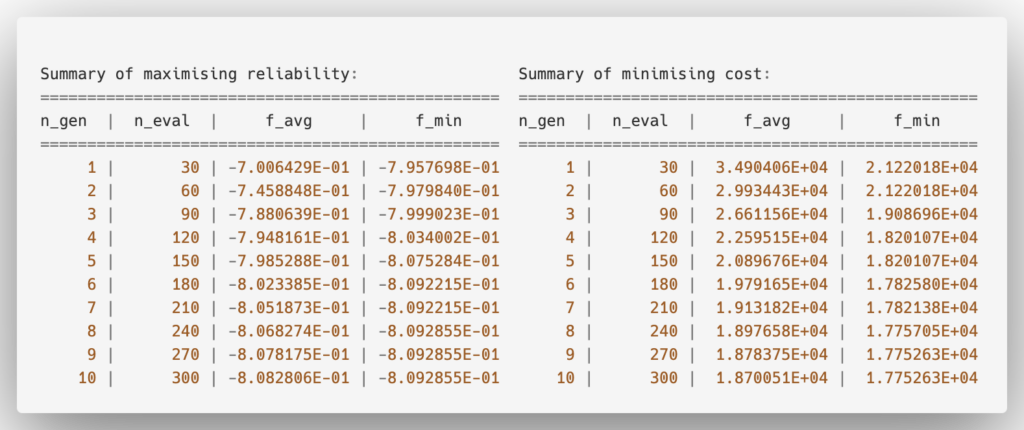

Now let’s review the results. Below is a summary of each generation for the single objective optimization cases, one focusing on minimising cost and the other maximising reliability. From the f_min metric, we can observe that both solutions stabilise within the last two to three generations. The optimal solution for maximising reliability achieves an overall reliability of 80.9%. On the other hand, the solution that minimises cost results in a total of $1,7800.

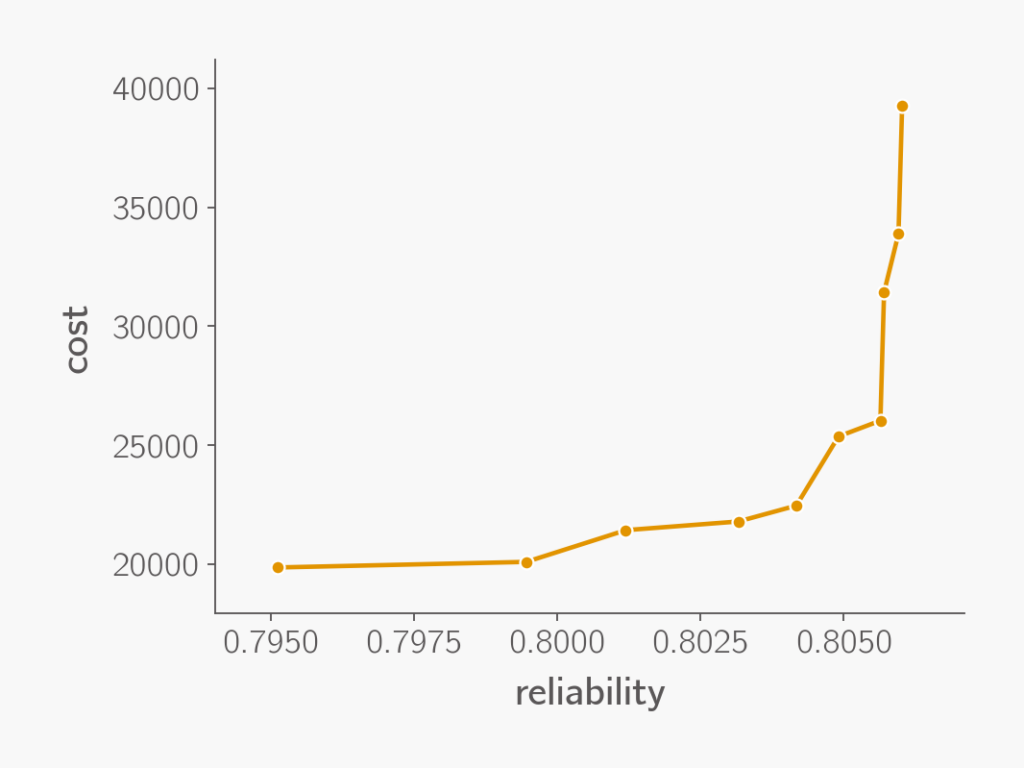

When balancing cost and reliability, the Multi-Objective Optimization produces a Pareto front, revealing the trade-offs between the two objectives. The plot shows a clear trend where reliability increases with cost across different designs. Interestingly, in the lower-left region of the plot, we see that a slight increase in spending on components significantly boosts reliability. However, as we move towards the right side of the plot, the cost rises steeply with only marginal gains in reliability, highlight the potential “sweet spot” in the trade-off between cost and reliability.

Conclusions

Genetic Algorithm has demonstrated remarkable versatility in tackling various design optimization challenges. In this article, we’ve explored the core principles that drive Genetic Algorithm and walked through a practical Python implementation to solve a combinatorial optimization problem often encountered in system design. The ease with which Genetic Algorithm can be applied, combined with their effectiveness in navigating through large design space, underscores their value as a power tool in design optimization.

If you’re eager to learn more about different design optimization techniques, explore the courses offered by OptimiSE Academy here: http://optimise.courses/learn !

Leave a Reply