Optimize Your Products with Effective Design Strategies

From Feasibility to Excellence: The Critical Role of Optimization in System Design

Developing complex systems is challenging, and optimizing them can be even more daunting. However, design optimization plays a pivotal role in engineering, directly impacting both the cost and the performance of your system. It holds the key to setting your product apart from the competition, driving innovation and efficiency.

To help you get the most out of your designs, here are five expert tips that will guide you toward mastering optimization and unlocking the full potential of your product.

Tip 1: Explore the Solution Space Before Optimization

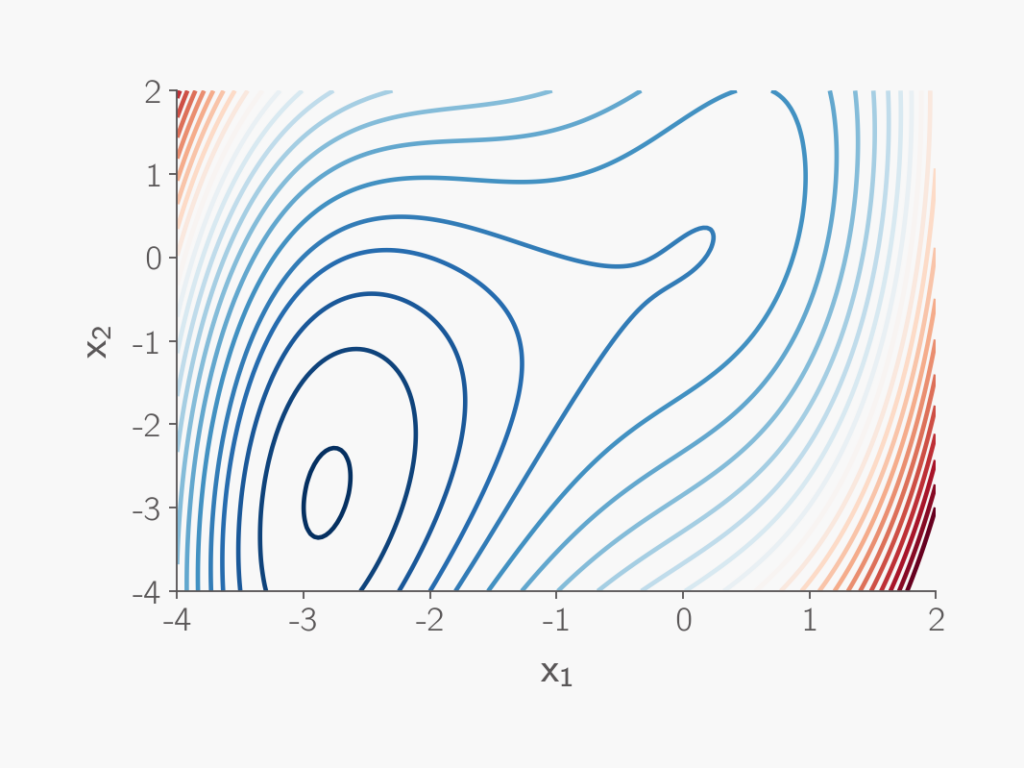

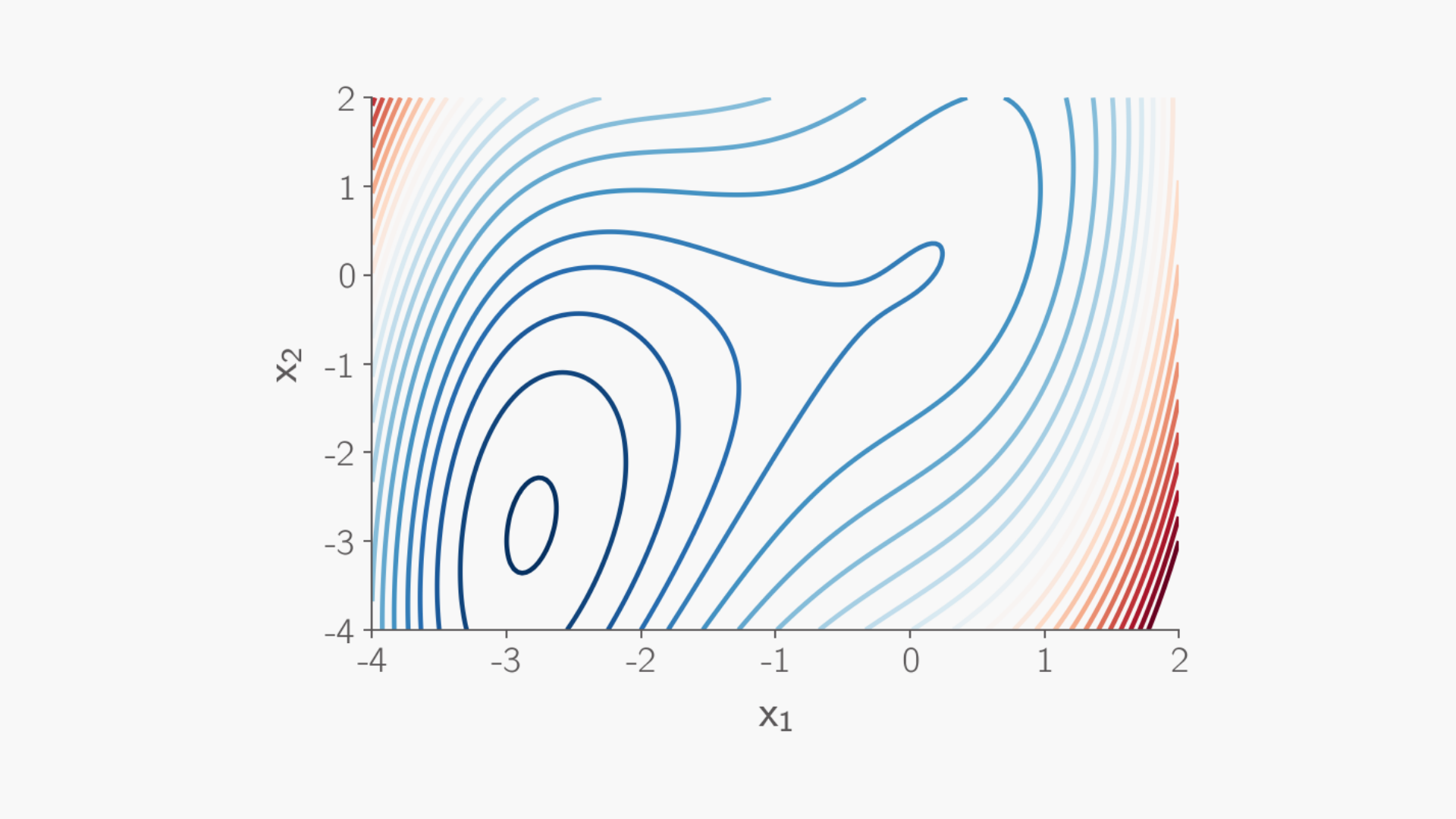

Before rushing into optimization, take a step back to fully explore your solution space. A great way to do this is through a parameter sweep, which allows you to map out how different variables influence your objective function. This exploration gives you valuable insight into the behaviour of your objective function — whether it’s smooth or discontinuous, unimodal or multimodal, and what the trends or computational demands are. By understanding these characteristics early on, you can anticipate challenges and adjust your approach accordingly.
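As a minimal sketch of such a sweep, the snippet below evaluates a two-variable objective on a regular grid, counts the interior grid points that are lower than their four neighbours (a rough hint of multimodality), and reports the best grid point. The function `objective`, the bounds, and the grid resolution are hypothetical choices made purely for illustration; a real sweep would call your own model.

```python
import math

def objective(x: float, y: float) -> float:
    """Hypothetical objective with a ripple in x, for illustration only."""
    return (x - 1.0) ** 2 + (y + 0.5) ** 2 + 0.5 * math.sin(3.0 * x)

def linspace(lo: float, hi: float, n: int) -> list[float]:
    """Return n evenly spaced values from lo to hi inclusive."""
    step = (hi - lo) / (n - 1)
    return [lo + i * step for i in range(n)]

def sweep(f, xs, ys):
    """Evaluate f on the full grid; result[i][j] = f(xs[i], ys[j])."""
    return [[f(x, y) for y in ys] for x in xs]

def count_grid_minima(values) -> int:
    """Count interior grid points that are lower than their four neighbours."""
    count = 0
    for i in range(1, len(values) - 1):
        for j in range(1, len(values[0]) - 1):
            v = values[i][j]
            neighbours = (values[i - 1][j], values[i + 1][j],
                          values[i][j - 1], values[i][j + 1])
            if all(v < nb for nb in neighbours):
                count += 1
    return count

def best_grid_point(values, xs, ys):
    """Return (x, y, f) for the lowest value found on the grid."""
    i, j = min(((i, j) for i in range(len(xs)) for j in range(len(ys))),
               key=lambda ij: values[ij[0]][ij[1]])
    return xs[i], ys[j], values[i][j]

# Sweep both variables over an assumed range of [-3, 3].
xs = linspace(-3.0, 3.0, 61)
ys = linspace(-3.0, 3.0, 61)
values = sweep(objective, xs, ys)
print("grid minima found:", count_grid_minima(values))
print("best grid point (x, y, f):", best_grid_point(values, xs, ys))
```

More than one grid minimum suggests the objective may be multimodal, in which case the starting point of a local optimizer matters. The count depends on the grid resolution, so treat it as a hint rather than a proof.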

Instead of tackling the entire optimization problem at once, break it down into smaller problems and solve those first. This approach not only helps you grasp the intricacies of the problem but also reveals which computations are costly. From there, you can apply targeted strategies such as surrogate modelling in specific subproblems. Additionally, breaking the problem into smaller pieces allows for easier benchmarking with known results or trends, ensuring your setup is accurate before committing to full-scale optimization.

Tip 2: Pay Attention to Derivatives

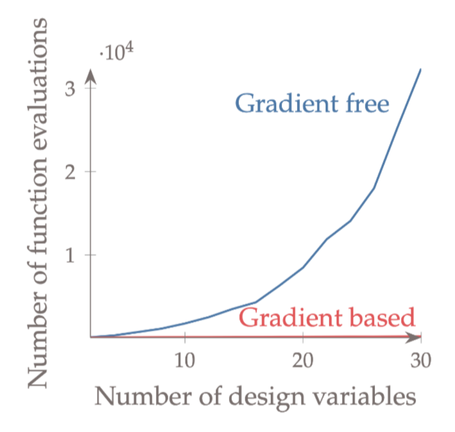

Gradient-based optimization algorithms generally outperform gradient-free methods in efficiency and scalability, provided the objective is smooth enough for derivatives to be meaningful. This is especially true when dealing with high-dimensional problems that involve numerous design variables. In such cases, gradient-free algorithms can become impractical due to the significant time and computational costs involved.

However, many people shy away from gradient-based algorithms because they struggle with computing accurate derivatives. The accuracy of these derivatives can make or break the entire optimization process. Most gradient-based optimization software defaults to using finite differences to estimate derivatives. While convenient, finite differences often lack the accuracy needed, which can lead to suboptimal results or even failure in optimization.

To avoid this, it’s highly recommended that you supply your own derivatives whenever possible. Modern optimization frameworks often break down the process into smaller components, making it easier to derive analytic derivatives for each part. Tools like sympy can assist in verifying your analytic derivatives, ensuring that your calculations are correct.

Finally, it’s important to verify your derivative computations using alternative methods, such as the complex-step method or automatic differentiation, which are often more reliable than finite differences. Finite differences can still serve as a sanity check, but they are inherently less accurate and should not be relied upon as the definitive verification method.
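The following sketch shows one way such a check might look. It compares a hand-derived derivative against a complex-step estimate and a forward finite difference. The test function `f`, the evaluation point, and the step sizes are illustrative assumptions. The complex-step method only works when the function is written with operations that accept complex inputs, which is why `cmath` is used here.

```python
import cmath
import math

def f(x):
    """Hypothetical smooth test function; accepts real or complex x."""
    return cmath.exp(x) * cmath.sin(x)

def df_analytic(x: float) -> float:
    """Hand-derived derivative of f: exp(x) * (sin(x) + cos(x))."""
    return math.exp(x) * (math.sin(x) + math.cos(x))

def complex_step(func, x: float, h: float = 1e-20) -> float:
    """Complex-step estimate: Im(func(x + i*h)) / h. No subtraction is involved."""
    return func(complex(x, h)).imag / h

def forward_difference(func, x: float, h: float = 1e-8) -> float:
    """Forward finite-difference estimate: (func(x + h) - func(x)) / h."""
    return (func(x + h) - func(x)).real / h

x0 = 1.5
exact = df_analytic(x0)
print("complex-step error:      ", abs(complex_step(f, x0) - exact))
print("finite-difference error: ", abs(forward_difference(f, x0) - exact))
```

Because the complex-step estimate involves no subtraction of nearly equal numbers, its step can be made extremely small without cancellation error. The finite difference has to trade truncation error against roundoff error.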

Tip 3: Apply Scaling Appropriately

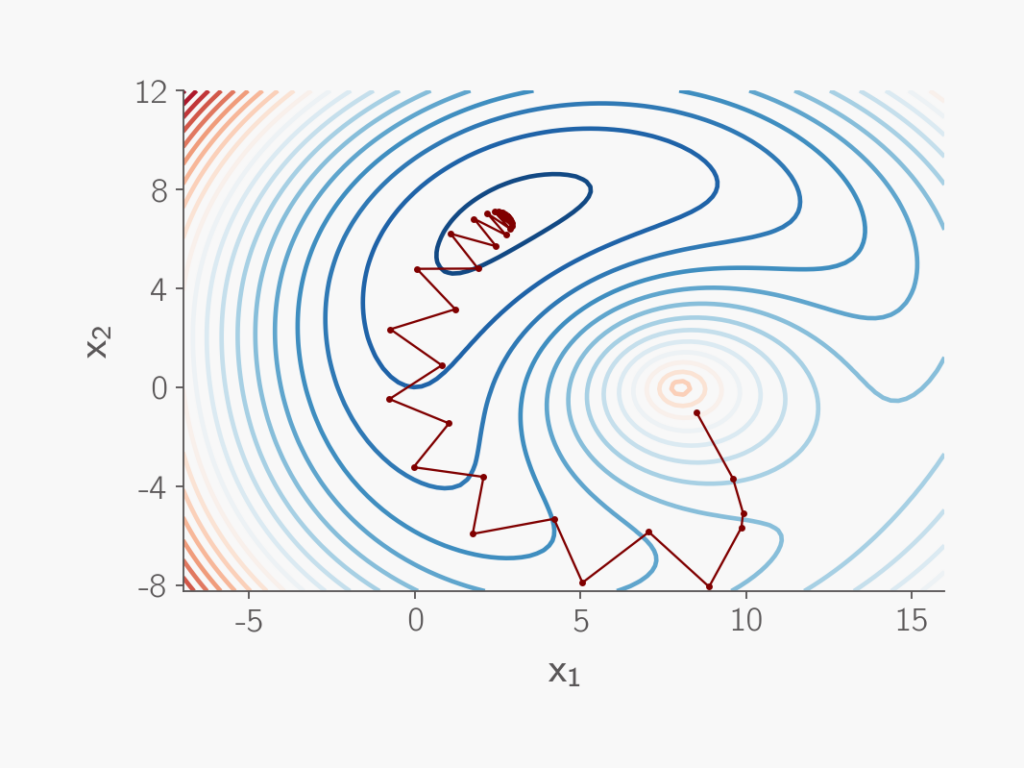

Physical quantities in engineering design can vary dramatically in scale. For instance, in structural optimization, material thickness might be on the order of 10⁻³ meters, while the length of a component could be around 1 meter. If both dimensions are measured in meters, the design space can resemble a narrow valley: steep in one direction and shallow in the other.

Optimization algorithms like steepest descent are highly susceptible to scaling. When faced with a poorly scaled design space, the optimizer may struggle to navigate efficiently, zigzagging through the space and having difficulty converging to an optimal solution. Although some optimization algorithms are less sensitive — or even invariant — to scaling, applying appropriate scaling can help significantly improve performance.

A good rule of thumb is to scale all design variables, as well as the objective and constraint functions, so that they fall within the same order of magnitude, ideally around unity. If the objective function is much less sensitive to certain variables than others, a more sophisticated approach may be required. In this case, you can scale the variables and functions so that the gradient elements have similar magnitudes, ensuring a balanced optimization process.

It’s essential, however, to be mindful of where scaling is applied. While the optimizer works with scaled variables, the underlying model still requires unscaled values. Fortunately, most optimization programs provide user-friendly interfaces to specify scaling factors, ensuring the conversion between scaled and unscaled variables occurs seamlessly within the optimization workflow.
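To make the effect concrete, here is a small sketch under stated assumptions. It uses a hypothetical quadratic objective in a thickness (around 10⁻³ m) and a length (around 1 m), and a fixed-step gradient descent as a simplified stand-in for steepest descent. The objective, the scale factors in `SCALES`, and the step sizes are all illustrative. The scaled wrapper converts back to physical units before calling the model, mirroring the split between what the optimizer sees and what the model needs.

```python
SCALES = [1e-3, 1.0]  # assumed characteristic magnitudes: thickness [m], length [m]

def f(x):
    """Hypothetical objective in physical units; minimum at x = [2e-3, 1.0]."""
    return (x[0] / 1e-3 - 2.0) ** 2 + (x[1] - 1.0) ** 2

def grad(x):
    """Analytic gradient of f with respect to the physical variables."""
    return [2.0 * (x[0] / 1e-3 - 2.0) / 1e-3, 2.0 * (x[1] - 1.0)]

def to_physical(s):
    """Map scaled (order-one) variables back to physical units."""
    return [si * ci for si, ci in zip(s, SCALES)]

def f_scaled(s):
    """Objective as seen by the optimizer; the model still gets physical values."""
    return f(to_physical(s))

def grad_scaled(s):
    """Chain rule: d f / d s_i = (d f / d x_i) * scale_i."""
    return [gi * ci for gi, ci in zip(grad(to_physical(s)), SCALES)]

def gradient_descent(func, gradient, x0, step, tol=1e-8, max_iter=10_000):
    """Fixed-step gradient descent; returns (x, iterations used)."""
    x = list(x0)
    for k in range(max_iter):
        if func(x) < tol:
            return x, k
        x = [xi - step * gi for xi, gi in zip(x, gradient(x))]
    return x, max_iter

# Unscaled: the step must stay below 1e-6 for stability in the thickness direction,
# so progress along the length direction is extremely slow.
_, n_unscaled = gradient_descent(f, grad, [1e-3, 0.5], step=5e-7)
# Scaled: both directions have the same curvature, so one step size suits both.
_, n_scaled = gradient_descent(f_scaled, grad_scaled, [1.0, 0.5], step=0.5)
print("iterations, unscaled:", n_unscaled)
print("iterations, scaled:  ", n_scaled)
```

In this toy problem the unscaled run exhausts its iteration budget while the scaled run converges almost immediately. Real problems are rarely this clean, but the mechanism is the same.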

Tip 4: Examine and Understand the Implications of Noise

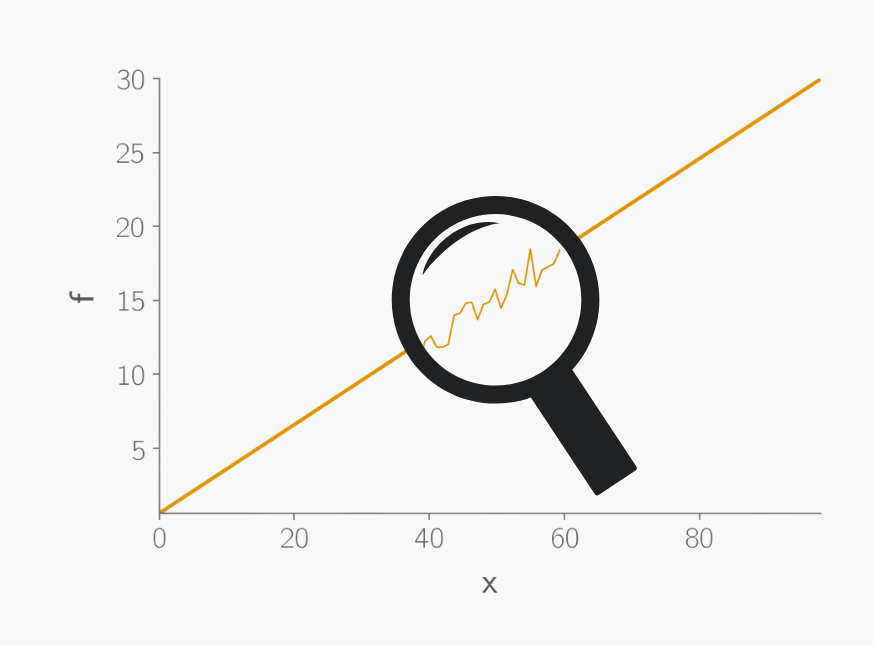

In any optimization process, various sources of error — such as iterative errors, roundoff errors, and truncation errors — can accumulate and become numerical noise in the model. This noise, if not properly managed, can obscure the true behaviour of the system and disrupt the optimization process.

Computers can only represent numbers with finite precision, and this machine precision defines the lower bound for numerical errors. Examining and understanding the noise level in your system is crucial because it directly influences which optimizers can be used. This is especially important when using gradient-based algorithms, as taking derivatives can amplify the noise.
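One simple way to see this amplification is a step-size study, sketched below. It differentiates `math.sin` with a forward difference for a range of step sizes and compares the result with the known derivative. The choice of function, evaluation point, and step range are assumptions for illustration; in a real model the exact derivative is unknown and the noise floor is usually far above machine precision.

```python
import math
import sys

def forward_difference(func, x: float, h: float) -> float:
    """Forward finite-difference estimate of the derivative of func at x."""
    return (func(x + h) - func(x)) / h

def step_size_study(func, dfunc, x: float, exponents=range(1, 16)):
    """Return a list of (h, absolute error) pairs for h = 10**-k."""
    exact = dfunc(x)
    return [(10.0 ** -k, abs(forward_difference(func, x, 10.0 ** -k) - exact))
            for k in exponents]

print("machine epsilon:", sys.float_info.epsilon)
for h, err in step_size_study(math.sin, math.cos, 1.0):
    print(f"h = {h:.0e}   error = {err:.3e}")
```

The error first shrinks as the step gets smaller (truncation error dominates), then grows again once roundoff in the function values is divided by a tiny step. A noisier function moves that turning point toward larger steps.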

A more nuanced consideration is the method of data handling in your optimization pipeline. Using external files to transfer data between different stages can introduce precision loss and unnecessary delays. A better approach is to pass data directly through memory when possible, preserving precision and speeding up the process by avoiding the latency associated with file read/write operations.
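The sketch below illustrates the precision side of this point. It uses in-memory text buffers as stand-ins for files, and the 6-digit format is an assumed example of a typical export setting rather than the behaviour of any specific tool.

```python
import io
import math

value = math.pi / 3.0  # stand-in for an intermediate result

# Text "file" written with a fixed 6-digit format.
truncated_file = io.StringIO(f"{value:.6e}\n")
from_truncated = float(truncated_file.read())

# repr() emits enough digits for an exact round trip of a Python float.
exact_file = io.StringIO(repr(value) + "\n")
from_exact = float(exact_file.read())

print("loss with 6-digit text:", abs(from_truncated - value))
print("loss with repr text:   ", abs(from_exact - value))
```

If files are unavoidable, writing full-precision text or a binary format avoids the truncation, though not the read/write latency.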

Tip 5: Adopt Good Software Development Practices

Whether your design optimization is part of an independent study or a fully integrated software pipeline, adopting good coding and software development practices is essential. Not only do these practices enhance efficiency, but they also ensure that your optimization process is reusable, maintainable, and sustainable over time.

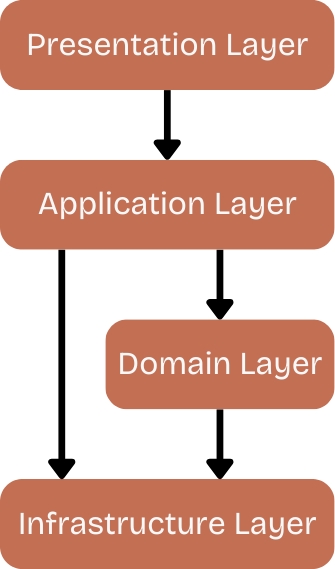

A well-structured software architecture is crucial. For example, employing a Domain-Driven Design approach can help ensure that the software reflects the underlying business or engineering logic in a clear and organised way. This leads to more understandable, modular, and adaptable code, which is particularly valuable when scaling the optimization process or extending it to future projects.

A successful CI/CD (Continuous Integration/Continuous Deployment) pipeline is another key aspect of robust software development. One of the most important components of CI/CD is testing. Ideally, you should write unit tests, integration tests, and end-to-end tests to cover every aspect of the optimization process. These tests make debugging easier, allowing you to quickly isolate and address issues by pinpointing the exact part of the system that’s malfunctioning. Implementing automated code quality checks and following design principles such as SOLID further improves reliability and consistency, ensuring that your codebase remains robust over time.

Version control is another indispensable tool. Using version control systems like Git ensures that changes are tracked and reversible. Adopting standardised versioning approaches such as semantic versioning (SemVer) and conventional commits allows for logical, clear histories, making it easier to identify stable versions and revert to them if needed.

Finally, don’t overlook the importance of thorough documentation. In addition to documenting the functions and their inputs/outputs, it’s beneficial to include type hints to make your code even more readable and understandable for other developers — or for yourself in the future. Well-documented code reduces the time needed to onboard new team members or debug issues, making your optimization pipeline much easier to maintain and scale.
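As a small illustration of these practices, the sketch below shows a documented, type-hinted helper together with a unit test. The function `to_physical` and its test class are hypothetical examples, not part of any particular framework.

```python
import unittest
from typing import Sequence

def to_physical(scaled: Sequence[float], factors: Sequence[float]) -> list[float]:
    """Convert scaled design variables back to physical units.

    Args:
        scaled: Design variables as seen by the optimizer (dimensionless).
        factors: Characteristic magnitude of each variable in physical units.

    Returns:
        Physical values, computed element-wise as scaled[i] * factors[i].

    Raises:
        ValueError: If the two sequences differ in length.
    """
    if len(scaled) != len(factors):
        raise ValueError("scaled and factors must have the same length")
    return [s * f for s, f in zip(scaled, factors)]

class ToPhysicalTest(unittest.TestCase):
    """Unit tests for to_physical."""

    def test_converts_each_variable(self):
        self.assertEqual(to_physical([2.0, 0.5], [1e-3, 1.0]), [0.002, 0.5])

    def test_rejects_length_mismatch(self):
        with self.assertRaises(ValueError):
            to_physical([1.0], [1.0, 2.0])
```

Saved in a module, the tests can be run with `python -m unittest`, and the same command can be wired into a CI pipeline so that every change is checked automatically.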

Final Thoughts

Design optimization is a powerful tool that can transform the performance, cost-effectiveness, and competitiveness of complex systems. However, achieving optimal results requires more than just plugging numbers into an algorithm. By carefully exploring the solution space, paying close attention to derivatives, applying appropriate scaling, understanding the impact of noise, and adopting sound software development practices, you can significantly improve the efficiency and reliability of your optimization process.

Each of these steps is essential to ensuring that your system design not only meets the necessary requirements but also performs at its best. Optimization is as much about methodology as it is about theory. By approaching it strategically, you can unlock new levels of innovation and excellence in your projects, driving both short-term gains and long-term success.

Remember, the right optimization practices will not only save time and resources but also open the door to better, more effective designs — giving you a competitive edge in today’s fast-paced engineering landscape.

At OptimiSE, we specialise in optimizing engineering systems and streamlining development processes. Book a free initial consultation today, and let’s discuss how we can transform your organisation’s design approach. Reach out now to get started!
